Duration: 36 months

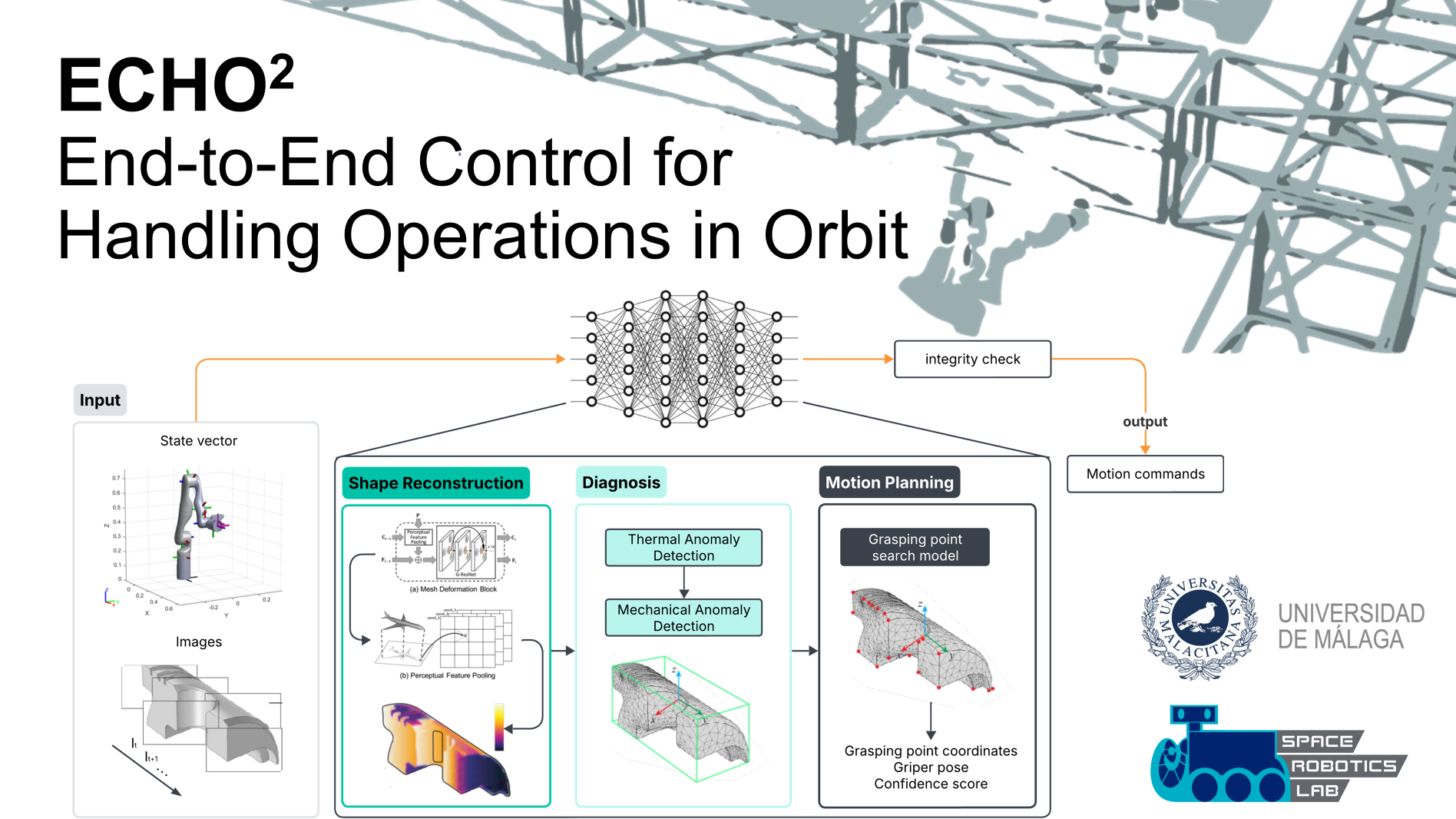

During the operation of the ISS, critical structural and servicing components must be constantly monitored, maintained, and sometimes repaired through extravehicular activities. The cost, risk, and logistical complexity of these activities are substantial compared with the alternative: inspecting, diagnosing, and repairing components fully autonomously with robots. However, current in-space robotic servicing methods rely heavily on standardized interfaces, visual markers, and preset instructions. These work well for controlled assembly tasks, but the vital elements of Large Space Structures (LSS) that need inspection and repair may not be compatible with standard markers and interfaces. Robotic inspection and repair of LSS poses unique challenges because of the diversity of the components involved and the unforeseeable nature of the tasks, which makes current approaches impractical at scale. In addition, the manipulator's motion must compensate for adverse dynamic interactions. We propose to develop an end-to-end markerless visuomotor pipeline that enables robotic systems to autonomously inspect, diagnose, and repair diverse components, regardless of their shape or orientation, without markers or standardized interfaces. Our solution introduces three key innovations: 1) a markerless vision system that allows robots to identify and manipulate components based on their inherent features; 2) a versatile and efficient method for finding optimal grasping points; and 3) perception-aware motion planning and control that enables adaptation to off-nominal conditions. To validate this technology, we will demonstrate robotic inspection and repair tasks on a scaled-down mock-up of an LSS on a free-floating testbed. A robotic arm will autonomously inspect components of varying sizes and shapes, diagnose them, and, as necessary, plan a course of action to repair them, demonstrating the robot's ability to carry out complex, long-horizon tasks without human intervention.