Status: Running

Duration: 18 months

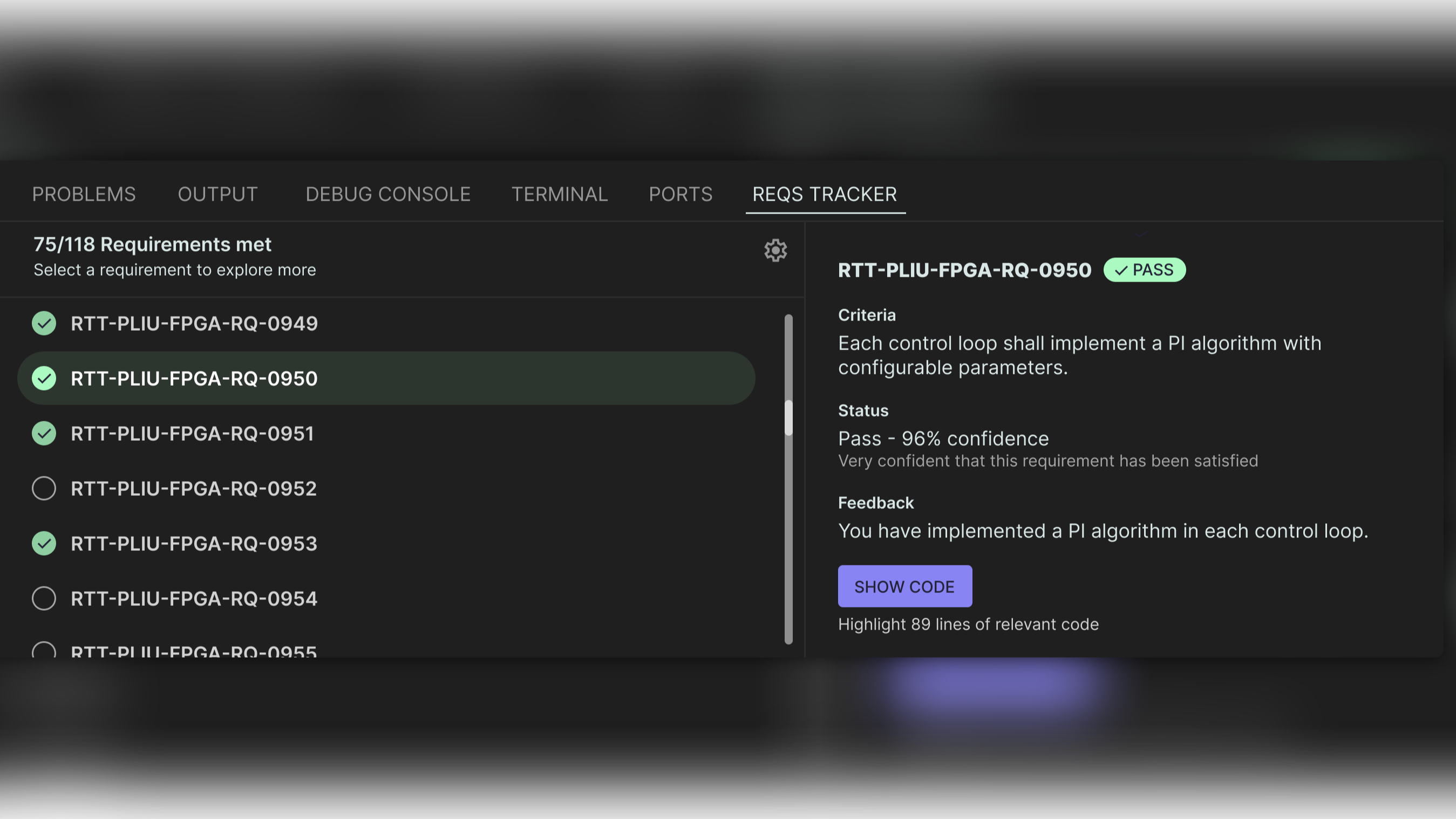

FPGA verification remains a critical bottleneck: 84% of designs experience non-trivial bug escapes into production. The root cause often lies not in faulty logic but in requirement-related issues, such as outdated, ambiguous, or incorrectly implemented specifications. Traditional simulation and coverage-driven methods struggle to detect these mismatches because traceability between the specification and the RTL is limited.

To address this, we propose a novel AI-assisted verification approach that uses Large Language Models (LLMs) to reason about hardware designs in the context of their specifications. The system determines whether each requirement is fully, partially, or not implemented, uncovers ambiguity in the specification, and enables AI-driven functional coverage by directly linking natural-language intent to design logic. Powered by retrieval-augmented generation (RAG) and fine-tuned on hardware datasets and human evaluations, the model analyses code and specifications together with high contextual accuracy. The solution will be delivered as a secure, open-weight LLM-based tool, deployable on-premises or in the cloud, and is intended to be available both as a web interface and as a Visual Studio Code extension, offering real-time insight during development. This approach not only enhances design confidence but also redefines how verification is performed, shifting it from reactive testing to proactive, semantic validation.
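To illustrate the retrieval half of a RAG pipeline that links a requirement to design logic, here is a minimal sketch, not the project's implementation. It assumes a toy index of RTL snippets (the module names and code in `RTL_INDEX` are hypothetical) and uses bag-of-words cosine similarity as a stand-in for learned embeddings. The helper names `retrieve` and `build_prompt` are likewise hypothetical. The actual LLM call is deliberately omitted; the sketch only shows how the retrieved RTL is placed next to the requirement so the model can judge whether that requirement is fully, partially, or not implemented.

```python
import math
import re
from collections import Counter

# Hypothetical RTL snippets indexed by module name (toy data for illustration only).
RTL_INDEX = {
    "uart_tx": "module uart_tx(input clk, input rst_n, input [7:0] data, output reg tx); // parity bit after data bits",
    "fifo": "module fifo(input clk, input rst_n, input wr_en, input rd_en, output full, output empty);",
    "timer": "module timer(input clk, input rst_n, output reg irq); // raise irq on counter overflow",
}


def tokenize(text: str) -> Counter:
    """Bag of lowercase alphanumeric tokens; identifiers such as rd_en split on '_'."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors (stand-in for embeddings)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(requirement: str, index: dict[str, str], k: int = 2) -> list[tuple[str, float]]:
    """Return the k modules whose text is most similar to the requirement."""
    query = tokenize(requirement)
    scored = [(name, cosine(query, tokenize(code))) for name, code in index.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


def build_prompt(requirement: str, index: dict[str, str], k: int = 2) -> str:
    """Assemble an LLM prompt that grounds the requirement in the retrieved RTL."""
    context = "\n\n".join(index[name] for name, _ in retrieve(requirement, index, k))
    return (
        "Requirement:\n" + requirement + "\n\n"
        "Relevant RTL:\n" + context + "\n\n"
        "Is the requirement implemented FULLY, PARTIALLY, or MISSING? "
        "Quote the RTL lines that support your answer and flag any ambiguity."
    )


if __name__ == "__main__":
    req = "Raise an interrupt request on timer overflow."
    print(retrieve(req, RTL_INDEX, k=1))
    print(build_prompt(req, RTL_INDEX, k=1))
```

Bag-of-words similarity keeps the example dependency-free, but it only matches shared vocabulary. A real system would more plausibly use embeddings that understand both specification prose and RTL identifiers.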