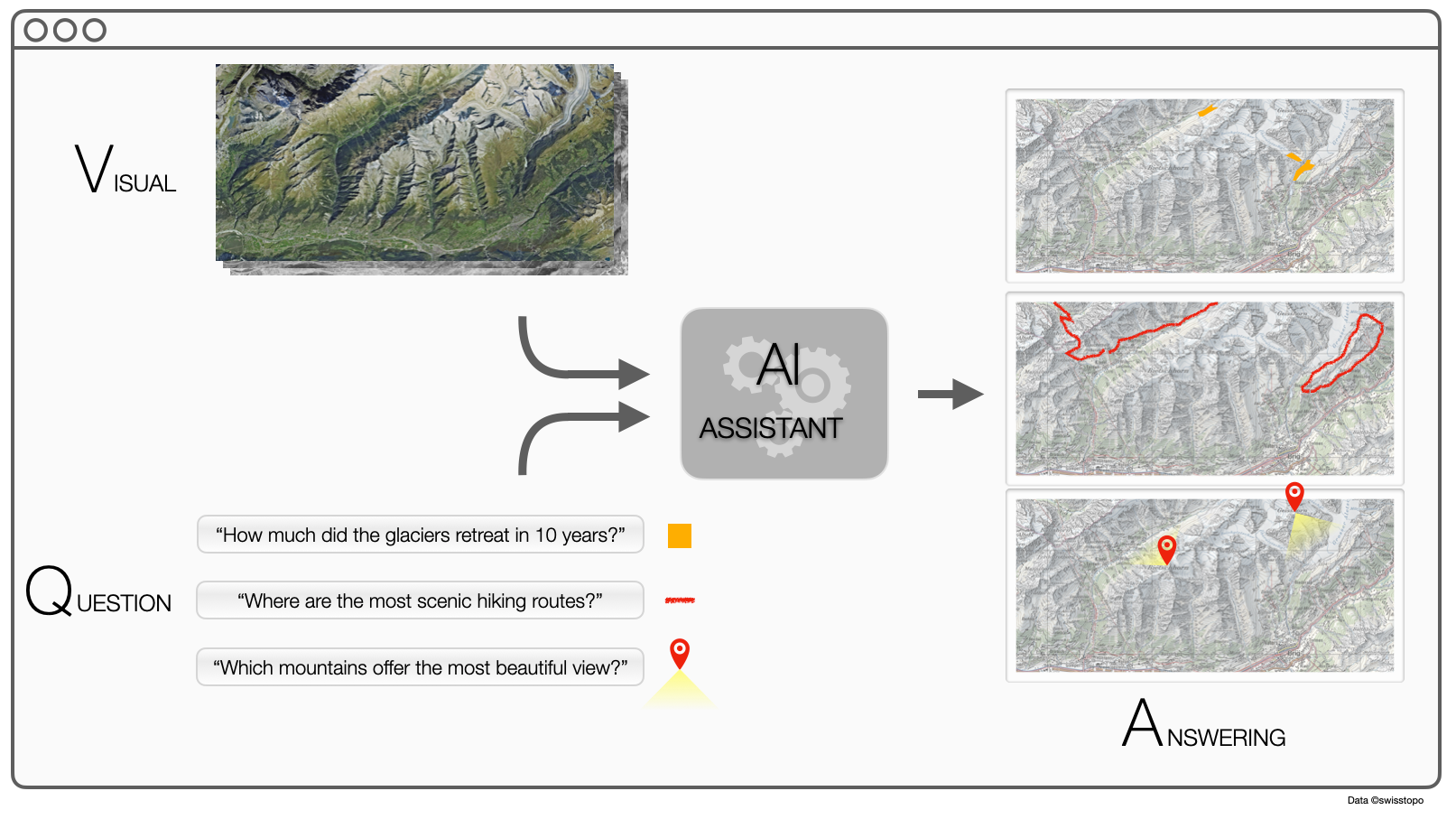

We want to make the content of remote sensing images accessible to everyone. We will create an ‘AI assistant’ that interprets questions about image content formulated in English and predicts the answer sought by the user (see picture). A single model will answer questions about land changes in time series, the presence of objects, and object counts. Besides offering a new way to interact with ESA products, such an assistant is a crucial step towards a Digital Twin of the Earth, in which the globe can be queried for information on demand through satellite imaging.

Such an AI assistant does not exist yet. Creating it requires groundbreaking technology that combines computer vision and remote sensing, to extract visual information from the images, with natural language processing (NLP), to obtain semantic information from the question. The two sources must then be fused to extract the required information, a process known in computer vision as Visual Question Answering (VQA) [Antol, ICCV 2015]. The only attempt towards a VQA system for remote sensing has recently been proposed by my team [Lobry, TGRS 2020]. With this project, we want to explore the possibility of handling spatio-temporal image queries, so that the system can extract information about change and dynamics. Decision makers and journalists, for example, could be users of such a system.

The technical challenges are to:

- Create a multi-temporal dataset of Sentinel-2 image pairs.
- Automatically generate a ground-truth database of image-question-answer triplets following the CLEVR protocol [Johnson, CVPR 2017].
- Develop a fully end-to-end model to process the triplets, based on the latest advances in NLP (e.g. attention mechanisms [Xu, ICML 2015]) and in multi-temporal remote sensing [Daudt, ICIP 2018].
- Explore program inference for the logical processing of the questions [Johnson, ICCV 2017].

The project (an EPFL PhD thesis) will lead to a first demonstrator of such an AI assistant, addressing questions about dynamics in the Swiss Alps, e.g. glacier movements and land-use changes.
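The automatic generation of image-question-answer triplets listed among the technical challenges can be illustrated with a minimal sketch. It is not the project's pipeline: it assumes that each Sentinel-2 image of a pair comes with a list of object class labels (hypothetically derived from land-cover annotations), and the names `Annotation`, `TEMPLATES` and `generate_triplets`, as well as the question templates themselves, are hypothetical. In the spirit of CLEVR, each template pairs a question pattern with a small "program" that is executed on the ground-truth annotations to compute the answer, covering presence, counting and change questions. For simplicity, presence and counting questions refer to the more recent date.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Annotation:
    """Hypothetical object-level annotation of one image at one acquisition date."""
    date: str
    labels: tuple  # one class label per annotated object, e.g. ("building", "glacier")


# A "program" maps the ground-truth counts of both dates (and a class) to an answer.
Program = Callable[[Counter, Counter, str], str]

# Hypothetical templates: each pairs a question pattern with the program
# that computes its answer from the annotations.
TEMPLATES: list[tuple[str, Program]] = [
    ("Is there a {cls} in the image?",
     lambda before, after, cls: "yes" if after[cls] > 0 else "no"),
    ("How many {cls}s are there?",
     lambda before, after, cls: str(after[cls])),
    ("Has the number of {cls}s changed between {d0} and {d1}?",
     lambda before, after, cls: "yes" if before[cls] != after[cls] else "no"),
]


def generate_triplets(image_id: str, before: Annotation, after: Annotation,
                      classes: list[str]) -> list[tuple[str, str, str]]:
    """Return (image_id, question, answer) triplets for one image pair."""
    # Counter returns 0 for classes absent from an image.
    counts_before = Counter(before.labels)
    counts_after = Counter(after.labels)
    triplets = []
    for cls in classes:
        for pattern, program in TEMPLATES:
            # Unused keyword arguments are ignored by str.format.
            question = pattern.format(cls=cls, d0=before.date, d1=after.date)
            answer = program(counts_before, counts_after, cls)
            triplets.append((image_id, question, answer))
    return triplets


if __name__ == "__main__":
    # Hypothetical tile and dates, for illustration only.
    for triplet in generate_triplets(
        "tile_0001",
        Annotation("2017-08-01", ("glacier", "building")),
        Annotation("2021-08-01", ("building", "building")),
        classes=["glacier", "building"],
    ):
        print(triplet)
```

Because answers are computed from the annotations rather than written by hand, the number of triplets grows with the number of image pairs and templates; a practical generator would likely also need to balance the answer distribution, which this sketch does not attempt.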