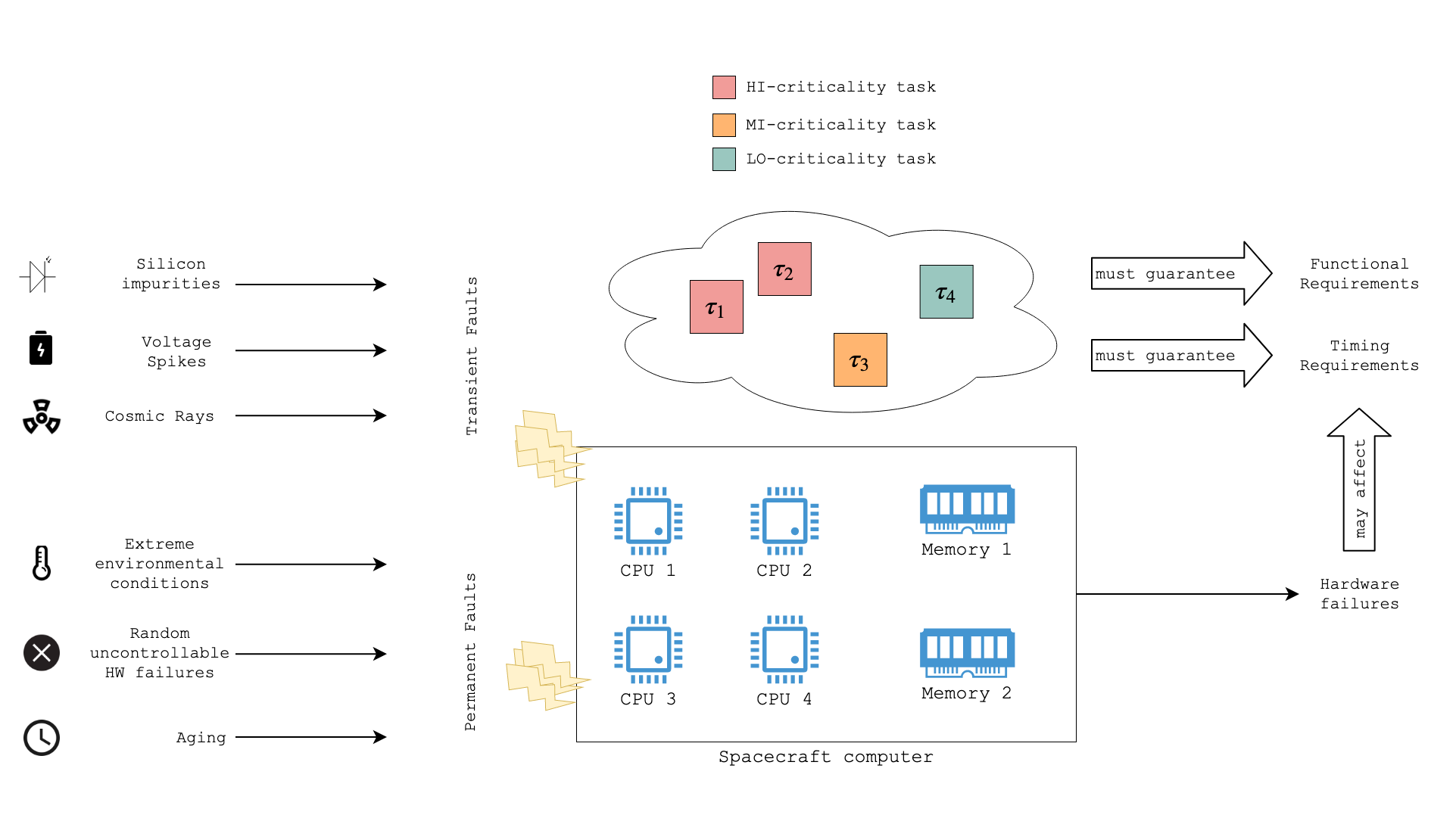

Computer architectures have evolved considerably in recent years, introducing several advanced features to overcome the single-core performance barrier. This increasing complexity makes them difficult to use in critical hard real-time systems, which must meet strict design requirements. At the same time, these new architectures would make it possible to consolidate several applications, possibly with different criticality levels, on a single computing platform. This is very attractive in the space domain, where it reduces on-board weight and cost. It is also demanded by modern software, which requires more and more computational power. Moving to more recent computing technologies and consolidating applications with different criticality levels on the same platform is therefore a necessary but very challenging step toward improving space activities in general. In addition, spacecraft computers must comply with strict fault-tolerance requirements. Because of their high overhead cost, traditional approaches alone can no longer satisfy these requirements.

Software fault-tolerance mechanisms are well known and already available in the scientific literature and in industrial solutions. However, their effect on real-time scheduling has not been properly studied. Furthermore, the coexistence of applications with different criticality levels not only makes real-time scheduling challenging, as shown by several misconceptions in academic papers, but also raises new, unexplored research questions related to fault tolerance.

The research aims to:

- identify the current limits and misconceptions in implementing mixed-criticality approaches on real systems;

- investigate the effect of fault-tolerance mechanisms when applied to real-time scheduling, and develop new techniques for joint failure and scheduling analysis;

- initiate a standardization process that will allow novel architectures and mixed-criticality approaches to be used in the next generation of spacecraft computers.