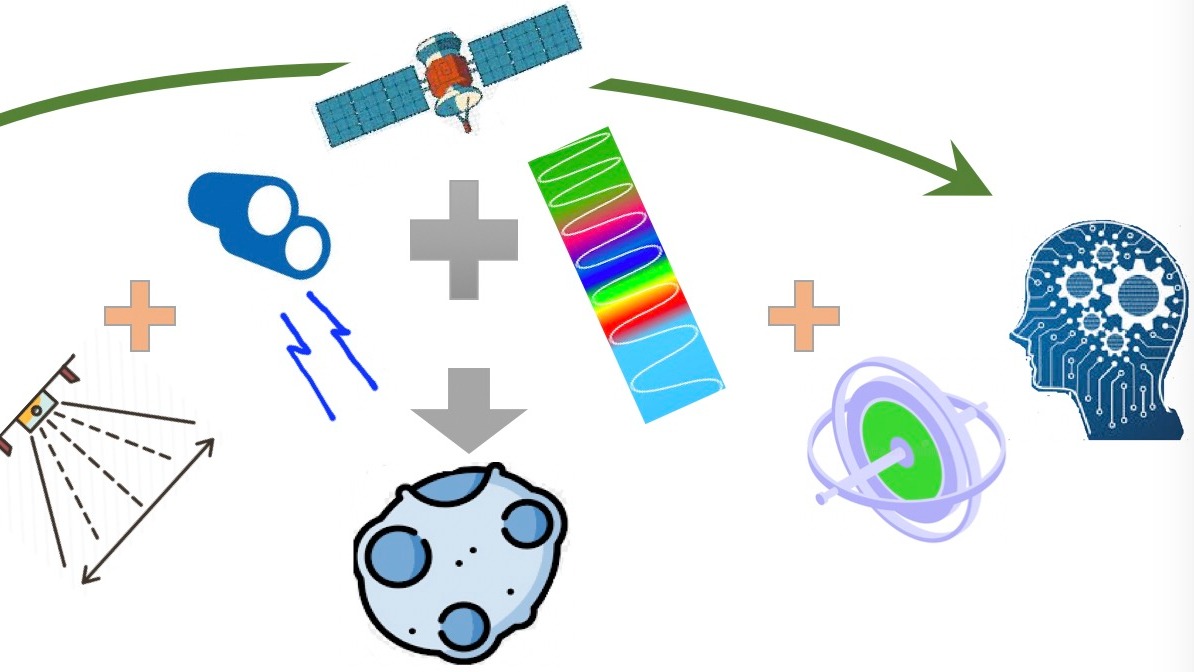

Navigation around asteroids demands a high degree of autonomy because the dynamic environment is challenging: irregular shapes, tumbling motion and large uncertainties, among other factors. It is very difficult to obtain good navigation solutions from a single sensor or a single data-processing technique. This research aims to fuse data from multiple onboard sensors, compensating for each sensor's limitations and potential data failures, to generate highly robust and autonomous navigation solutions for different mission scenarios.

First, using input images in multiple spectral bands (visible, near-infrared and thermal-infrared), we will explore fusing the sensor data at either the feature level or the decision level. Convolutional Neural Networks (CNNs) will be used for image processing (IP) and Recurrent Neural Networks (RNNs) for time-series data analysis, with feature-based methods, appearance-based methods, or a combination of the two, to produce high-quality segmentation and feature extraction for pose estimation (both position and attitude).

Second, LiDAR measurements will be added to this fusion framework to provide depth information, yielding more accurate range estimates and feature detections and thereby improving the quality of pose estimation.

Finally, the resulting pose estimates will be integrated with measurements from other sensors, such as the gyroscopes and accelerometers of the Inertial Measurement Unit (IMU), to generate navigation solutions. In addition, RNNs will be investigated for generating navigation solutions directly from the input images and LiDAR measurements.

Synthetic data from PANGU/Blender, on-ground data from laboratories and in-flight data from previous missions (e.g. DART, Rosetta, OSIRIS-REx) will all be used for training, validation and testing. Model-in-the-loop and hardware-in-the-loop tests will be performed to characterize the performance of each framework in terms of accuracy, efficiency and robustness for onboard applications.
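The first stage distinguishes feature-level from decision-level fusion of the visible, near-infrared and thermal-infrared images. The sketch below illustrates the difference under simplifying assumptions: each band is assumed to already have its own network that outputs either a feature vector (feature level) or per-class probabilities for a pixel (decision level). The band names, class labels and per-band weights are hypothetical placeholders, not values from this research, and the confidence-weighted average is only one common decision-level rule (majority voting or Dempster-Shafer combination are alternatives).

```python
from typing import Dict, List


def feature_level_fusion(features: Dict[str, List[float]]) -> List[float]:
    """Feature-level fusion: concatenate per-band feature vectors so a single
    downstream model sees all bands at once (band order fixed by sorted keys)."""
    fused: List[float] = []
    for band in sorted(features):
        fused.extend(features[band])
    return fused


def decision_level_fusion(probs: Dict[str, List[float]],
                          weights: Dict[str, float]) -> List[float]:
    """Decision-level fusion: weighted average of per-band class probabilities.
    Bands absent from `probs` (e.g. a failed sensor) or with zero weight are skipped."""
    available = [b for b in probs if weights.get(b, 0.0) > 0.0]
    if not available:
        raise ValueError("no usable spectral band")
    total_w = sum(weights[b] for b in available)
    n_classes = len(probs[available[0]])
    fused = [0.0] * n_classes
    for b in available:
        for k, p in enumerate(probs[b]):
            fused[k] += weights[b] * p / total_w  # normalized so probabilities sum to 1
    return fused


# Hypothetical per-pixel outputs for classes [background, asteroid surface, shadow]
probs = {
    "visible": [0.1, 0.6, 0.3],
    "nir": [0.2, 0.7, 0.1],
    "tir": [0.1, 0.8, 0.1],
}
weights = {"visible": 1.0, "nir": 1.0, "tir": 2.0}  # assumed per-band confidence
fused = decision_level_fusion(probs, weights)
label = max(range(len(fused)), key=fused.__getitem__)  # index of the most likely class

# Simulated failure of the visible camera: fuse the remaining bands only
degraded = decision_level_fusion({"nir": probs["nir"], "tir": probs["tir"]}, weights)
```

Feature-level fusion lets one model learn cross-band correlations but typically expects every band at inference time; decision-level fusion keeps the per-band models independent, so a failed band can simply be dropped, as in the `degraded` call.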
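For the second stage, one way LiDAR depth can sharpen range estimates and feature detections is sketched below, assuming the LiDAR return has already been registered to the camera pixel of a detected feature. A camera-only range estimate (hypothetical here, e.g. derived from a shape model) is combined with the LiDAR range by inverse-variance weighting, and the feature pixel is then lifted to a 3D point using an ideal pinhole camera model. The intrinsics `fx`, `fy`, `cx`, `cy`, all numeric values and the function names are illustrative assumptions, not the method of this research.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class PinholeIntrinsics:
    fx: float  # focal length in pixels along x
    fy: float  # focal length in pixels along y
    cx: float  # principal point x (pixels)
    cy: float  # principal point y (pixels)


def fuse_ranges(r_cam: float, var_cam: float,
                r_lidar: float, var_lidar: float) -> Tuple[float, float]:
    """Inverse-variance weighted fusion of two independent range estimates (m, m^2).
    The fused variance is never larger than the smaller input variance."""
    w_cam, w_lidar = 1.0 / var_cam, 1.0 / var_lidar
    var = 1.0 / (w_cam + w_lidar)
    return var * (w_cam * r_cam + w_lidar * r_lidar), var


def back_project_range(u: float, v: float, rng: float,
                       K: PinholeIntrinsics) -> Tuple[float, float, float]:
    """Lift pixel (u, v) to a 3D camera-frame point lying at slant range `rng`
    along the pixel's viewing ray (LiDAR measures range along the ray, not z)."""
    xn = (u - K.cx) / K.fx  # normalized image coordinates: ray direction (xn, yn, 1)
    yn = (v - K.cy) / K.fy
    s = rng / math.sqrt(xn * xn + yn * yn + 1.0)  # scale so that |point| == rng
    return xn * s, yn * s, s


K = PinholeIntrinsics(fx=1000.0, fy=1000.0, cx=512.0, cy=512.0)  # hypothetical camera
r, var = fuse_ranges(r_cam=1050.0, var_cam=100.0, r_lidar=1000.0, var_lidar=1.0)
point = back_project_range(812.0, 112.0, r, K)  # hypothetical feature pixel
```

Because the LiDAR variance is far smaller in this example, the fused range stays close to the LiDAR value while its variance drops slightly below the LiDAR variance alone.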
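The final stage integrates the image/LiDAR pose estimates with IMU data. A common structure for this, assumed here rather than taken from the proposal, is a Kalman filter in which accelerometer readings drive the prediction step and the vision/LiDAR position fixes act as measurements. The sketch is deliberately reduced to a single axis with state [position, velocity]; the class name, noise variances, rates and readings are hypothetical, and a real asteroid navigation filter would also estimate attitude from gyroscope rates in 3D, which this sketch does not attempt.

```python
from typing import List

Mat2 = List[List[float]]


class AxisKalmanFilter:
    """Single-axis linear Kalman filter with state x = [position, velocity]."""

    def __init__(self, p0: float, v0: float, P0: Mat2,
                 accel_noise_var: float, meas_noise_var: float) -> None:
        self.x = [p0, v0]
        self.P = [row[:] for row in P0]
        self.q = accel_noise_var  # accelerometer noise variance, (m/s^2)^2
        self.r = meas_noise_var   # vision/LiDAR position-fix variance, m^2

    def predict(self, accel: float, dt: float) -> None:
        """Propagate with the IMU acceleration: p += v*dt + a*dt^2/2, v += a*dt."""
        p, v = self.x
        self.x = [p + v * dt + 0.5 * accel * dt * dt, v + accel * dt]
        # P = F P F^T + G q G^T, with F = [[1, dt], [0, 1]] and G = [dt^2/2, dt]
        P = self.P
        a = P[0][0] + dt * (P[1][0] + P[0][1]) + dt * dt * P[1][1]
        b = P[0][1] + dt * P[1][1]
        c = P[1][0] + dt * P[1][1]
        d = P[1][1]
        g0, g1 = 0.5 * dt * dt, dt
        self.P = [[a + g0 * g0 * self.q, b + g0 * g1 * self.q],
                  [c + g1 * g0 * self.q, d + g1 * g1 * self.q]]

    def update(self, z: float) -> None:
        """Correct with a position measurement z (measurement matrix H = [1, 0])."""
        P = self.P
        s = P[0][0] + self.r               # innovation variance
        k0, k1 = P[0][0] / s, P[1][0] / s  # Kalman gain K = P H^T / s
        y = z - self.x[0]                  # innovation
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        # P = (I - K H) P
        self.P = [[(1 - k0) * P[0][0], (1 - k0) * P[0][1]],
                  [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]


kf = AxisKalmanFilter(p0=0.0, v0=0.0, P0=[[1.0, 0.0], [0.0, 1.0]],
                      accel_noise_var=1e-4, meas_noise_var=0.25)
dt = 0.1  # assumed 10 Hz IMU rate
for step in range(1, 101):
    kf.predict(accel=0.01, dt=dt)   # hypothetical constant accelerometer reading
    if step % 10 == 0:              # assumed 1 Hz vision/LiDAR position fix
        t = step * dt
        kf.update(z=0.5 * 0.01 * t * t)  # noise-free fix, for illustration only
```

The IMU runs at a higher rate than the vision/LiDAR pipeline, so the filter bridges the gaps between position fixes by prediction, and each fix shrinks the position variance `P[0][0]` below the measurement variance.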