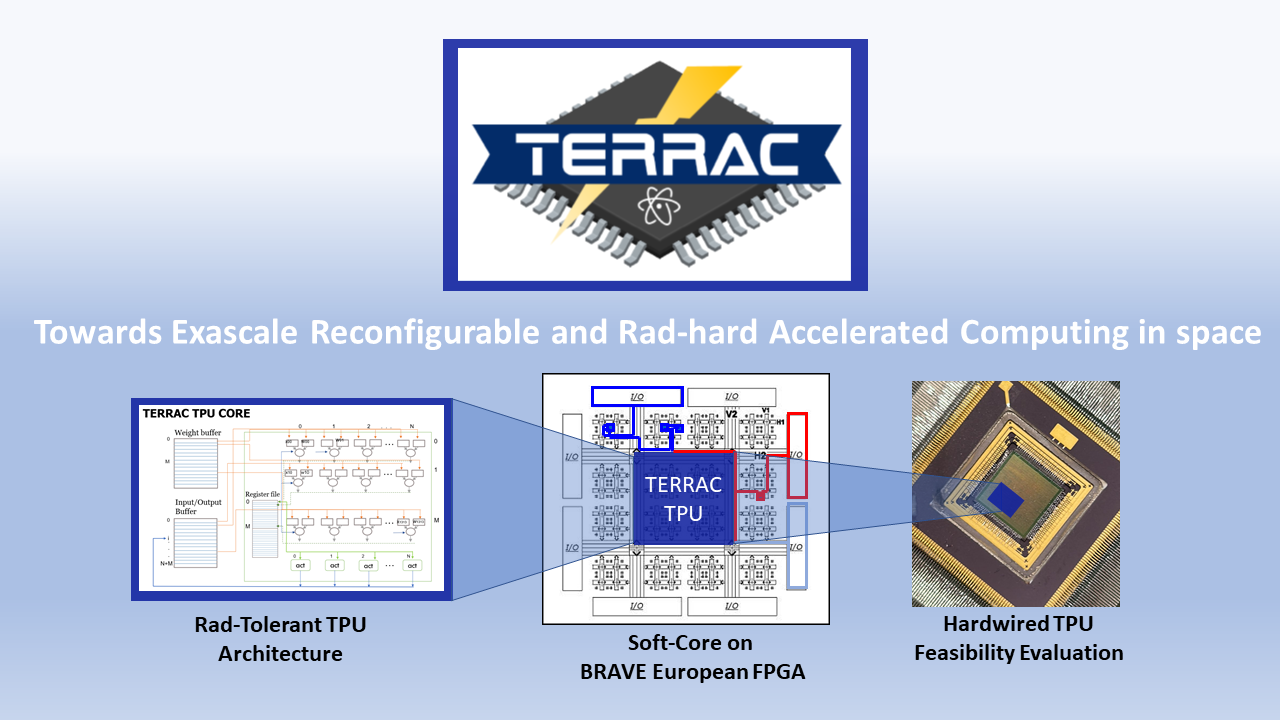

Today, an increasing number of space missions are demanding on-satellite high-performance computing capabilities. Radiation hardened Field Programmable Gate Arrays (FPGAs) allowed to implementation of several Digital Signal Processing (DSP) and Computer Vision (CV) designs with sufficient performance and programmability. However, to further enhance computing capabilities and permit the effective implementation of Vision-Based Navigation (VBN) algorithms, an ad-hoc HW accelerator able to elaborate multi-dimensional arrays (tensors) is needed. Tensors are fundamental units to store data such as the weights of a node in a neural network. They perform basic math operations such as addition, element-wise multiplication, and matrix multiplication. This HW accelerator, defined as Tensor Processing Unit (TPU), is an architecture customized for image elaboration algorithms and machine learning. It can manage massive multiplications and additions at high speed with a limited design area and power consumption. Several design strategies investigated the efficient implementation of TPU on FPGA architectures by improving the pipeline strategy and resource sharing towards the TPU processing elements (PEs) or by unifying the tensor computation kernel. Other solutions investigated the optimization of thin matrix multiplication with PE element rerouting. Nowadays, there are not any available design solutions for radiation-hardened TPU for FPGAs or ASICs having high performance and being radiation-hardened.