Video cameras with temporal resolutions finer than ~1 ms are heavy, bulky and expensive. They generate high data rates and require cooling, and imaging at high frame rates often requires high-voltage intensifiers that are equally inconvenient. Such camera systems can be used on the ground but are impractical in space.

To achieve high-speed imaging in space, we propose to study the use of Dynamic Vision Sensors (DVS), also known as neuromorphic cameras or event cameras. These cameras are small and combine low power consumption, modest data rates and high light sensitivity. They achieve this by reading out individual pixels only when the photon flux changes, thereby avoiding the read-out of complete frames. The technology is developing rapidly, with current cameras reaching ~10-microsecond temporal resolution [1], which is well suited to detecting fast, localized processes such as lightning, meteors and space debris. When mounted on a moving platform such as a spacecraft, they can also detect boundaries and edges of land surfaces and inland/coastal waters, or structures on planetary surfaces during autonomous landing.
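The per-pixel read-out principle described above can be illustrated with a minimal sketch. It is a simplified model and not the behaviour of any specific camera: the function name `generate_events`, the contrast threshold of 0.2 and the tiny 2x2 scene are all assumptions made for illustration, and a real sensor operates asynchronously in analogue circuitry rather than on sampled frames.

```python
import math

def generate_events(frames, timestamps, threshold=0.2, eps=1e-6):
    """Toy event-camera model (hypothetical helper, not a vendor API).

    Each pixel remembers the log intensity at its last event and emits a
    new event (t, x, y, polarity) only when the log intensity has changed
    by at least `threshold` (an assumed value) since then.
    """
    # Reference log intensity per pixel, initialised from the first frame.
    ref = [[math.log(v + eps) for v in row] for row in frames[0]]
    events = []
    for t, frame in zip(timestamps[1:], frames[1:]):
        for y, row in enumerate(frame):
            for x, v in enumerate(row):
                log_v = math.log(v + eps)
                delta = log_v - ref[y][x]
                if abs(delta) >= threshold:
                    polarity = 1 if delta > 0 else -1
                    events.append((t, x, y, polarity))
                    ref[y][x] = log_v  # reset the reference after an event
    return events

# A static 2x2 scene in which one pixel (x=1, y=0) flashes briefly.
frames = [
    [[1.0, 1.0], [1.0, 1.0]],
    [[1.0, 5.0], [1.0, 1.0]],
    [[1.0, 1.0], [1.0, 1.0]],
]
timestamps = [0.0, 10e-6, 20e-6]  # seconds; 10-microsecond steps
events = generate_events(frames, timestamps)
for event in events:
    print(event)
```

In this toy scene only the flashing pixel produces output: one positive event when it brightens and one negative event when it dims, while the three unchanged pixels are never read out. That is the sense in which the data rate scales with scene activity rather than with sensor size and frame rate.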

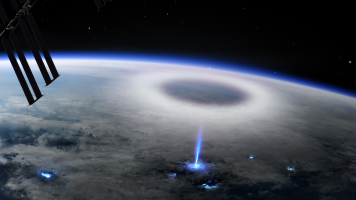

We propose to demonstrate the technology by imaging the fast lightning flashes at the tops of thunderstorm clouds [2,3], where the high temporal resolution of the cameras is a clear advantage. Such measurements have the potential to further our understanding of upper-atmospheric physics, which could ultimately help improve climate, atmosphere and weather models [4]. Initially, we will evaluate feasibility by observing high-voltage laboratory discharges and natural lightning; later, we seek to test the cameras in space in conjunction with the July 2023 launch of the Danish astronaut Andreas Mogensen to the ISS. Finally, we will develop a roadmap and a conceptual breadboard for a lightning imager intended for use on a space platform. The project seeks 18 months of funding for engineering and technical support salaries and for the purchase of DVS cameras.

- iniVation, White Paper, May 2020. https://inivation.com/wp-content/uploads/2020/05/White-Paper-May-2020.pdf

- Neubert, T., O. Chanrion, K. Dimitriadou, M. Heumesser, L. Husbjerg, I. Lundgaard Rasmussen, V. Reglero and N. Østgaard, Observations of the onset of a blue jet into the stratosphere, Nature, 589, 371-375, 2021.

- Chanrion, O., T. Neubert, A. Mogensen, Y. Yair, M. Stendel, N. Larsen and R. Singh, Profuse activity of blue electrical discharges at the tops of thunderstorms, Geophys. Res. Lett., 44, https://doi.org/10.1002/2016GL071311, 2017.

- ESA White Paper, HRE Strategy exercise 2021-2022, Section 2.6, https://esamultimedia.esa.int/docs/HRE/07_PhysicalSciences_Earth_Observ…

- Goodman, S. et al. The GOES-R Geostationary Lightning Mapper (GLM). Atmos. Res. 125–126, 34–49 (2013). https://doi.org/10.1016/j.atmosres.2013.01.006

- Yang, J., et al. Introducing the new generation of Chinese geostationary weather satellites – Fengyun 4 (FY-4). Bull. American Meteo. Soc. 98, 1637–1658 (2016). https://doi.org/10.1175/BAMS-D-16-0065.1

- Holmlund, K. et al. Meteosat Third Generation (MTG): continuation and innovation of observations from geostationary orbit. Bull. American Meteo. Soc. 102, E990–E1015 (2021). https://doi.org/10.1175/BAMS-D-19-0304.1

- iniVation current generation devices. https://inivation.com/wp-content/uploads/2021/08/2021-08-iniVation-devices-Specifications.pdf

- G. Gallego et al., “Event-based Vision: A Survey,” arXiv.org, Apr. 17, 2019. https://arxiv.org/abs/1904.08405 (accessed Apr. 05, 2022).

- G. Cohen et al., “Event-based Sensing for Space Situational Awareness,” The Journal of the Astronautical Sciences, vol. 66, no. 2, pp. 125–141, Jan. 2019, doi: 10.1007/s40295-018-00140-5.

- K. Kamiński, G. Cohen, T. Delbruck, M. Żołnowski, and M. Gędek, “Observational evaluation of event cameras performance in optical space surveillance,” ESA Proceedings Database. https://conference.sdo.esoc.esa.int/proceedings/neosst1/paper/475 (accessed Apr. 05, 2022).

- T.-J. Chin, S. Bagchi, A. Eriksson, and A. van Schaik, “Star Tracking Using an Event Camera,” Jun. 2019. Accessed: Apr. 05, 2022. [Online]. Available: http://dx.doi.org/10.1109/cvprw.2019.00208

- S. Roffe, H. Akolkar, A. D. George, B. Linares-Barranco, and R. Benosman, “Neutron-Induced, Single-Event Effects on Neuromorphic Event-based Vision Sensor: A First Step Towards Space Applications,” arXiv.org, Jan. 29, 2021. https://arxiv.org/abs/2102.00112 (accessed Apr. 05, 2022).

- Y. Nozaki and T. Delbruck, “Temperature and Parasitic Photocurrent Effects in Dynamic Vision Sensors,” IEEE Transactions on Electron Devices, vol. 64, no. 8, pp. 3239–3245, Aug. 2017, https://doi.org/10.1109/ted.2017.2717848

- Chanrion, O., T. Neubert, I. L. Rasmussen, C. Stoltze, D. Tcherniak, N. C. Jessen, J. Polny, P. Brauer, J. E. Balling, S. S. Kristensen, S. Forchhammer, P. Hoffmeyer, P. Davidsen, O. Mikkelsen, D. B. Hansen, D. D.V. Bhanderi, C. G. Petersen, M. Lorenzen. The Modular Multispectral Imaging Array (MMIA) of the ASIM payload on the International Space Station, Space Sci. Rev., 215: 28, 2019. https://doi.org/10.1007/s11214-019-0593-y