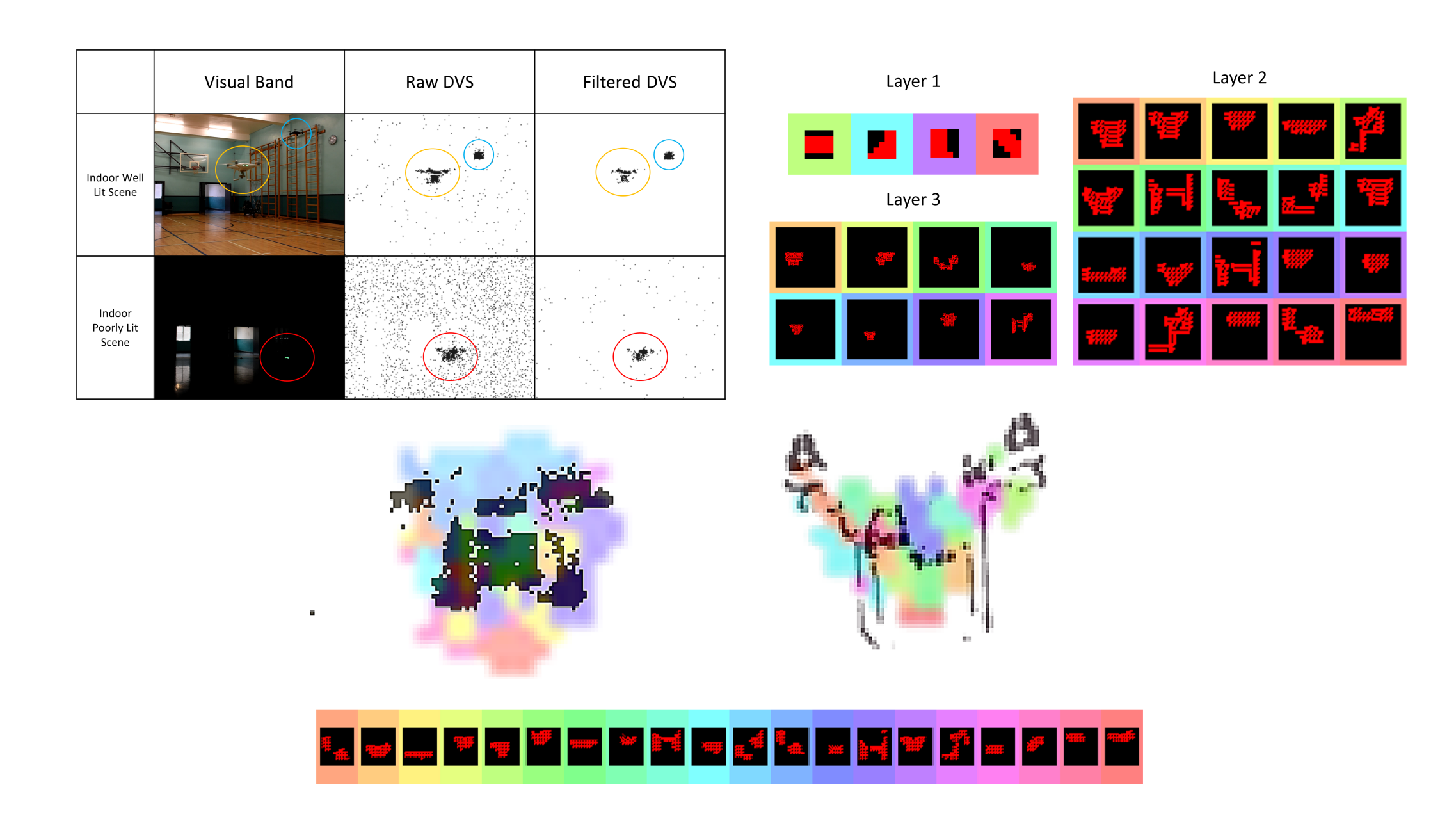

Although DNNs are deployed in space applications, their use is limited by the difficulty of porting them to hardware that both meets the application's computational requirements and fits within a low power budget. Despite the availability of dedicated DNN accelerators, the general consensus is that von Neumann architectures have intrinsic limitations that make inference inefficient. Neuromorphic (NM) processing offers an alternative to conventional computing architectures: NM systems process data by generating and propagating spikes in an event-based fashion. NM sensors produce spiking data that is processed by purpose-built spiking neural networks (SNNs). These networks are asynchronous and exploit the sparsity and low redundancy of the data to achieve lower processing latency and a smaller size, weight, and power (SWaP) profile. Previous works in the literature have demonstrated that event-based cameras can be successfully used for optical space imaging.

This work aims to demonstrate a proof of concept for using complex SNNs for in-space, edge-computing detection and tracking of targets, based on spatio-temporal features extracted from event-based optical data produced by onboard sensors. Because event-based cameras emit asynchronous spikes at a very high rate, they can readily highlight the outline of very fast (and very small) moving targets without motion blur. The outer shape and inner texture of a detected moving target can then be used to identify it. Events can also be aggregated over different integration times to automatically extract distinctive temporal motion patterns suited to the application context. Initial groundwork on event-based processing and control with an SNN trained through unsupervised learning has already shown promising results. This work will also target future deployment on real NM processors, such as the Loihi chip, to create an end-to-end NM processing pipeline.
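To make the idea of aggregating events over different integration times more concrete, the following is a minimal, illustrative sketch in plain Python. It assumes each event carries a microsecond timestamp, pixel coordinates, and a signed polarity (+1 for a brightness increase, -1 for a decrease), and it sums polarities per pixel within each integration window. It also includes a single leaky integrate-and-fire (LIF) neuron as one common example of a spiking unit that could consume such input. The names `Event`, `integrate_events`, and `lif_neuron`, along with the leak and threshold values, are hypothetical. This is not the authors' pipeline, and it is not a Loihi API.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Event:
    """Hypothetical event record: timestamp (us), pixel (x, y), signed polarity."""
    t_us: int
    x: int
    y: int
    polarity: int  # assumed +1 (brighter) or -1 (darker)


def integrate_events(events: List[Event], integration_time_us: int,
                     width: int, height: int) -> List[List[List[int]]]:
    """Sum event polarities per pixel within consecutive integration windows.

    Returns one height x width frame per window, starting at the earliest event.
    """
    if integration_time_us <= 0:
        raise ValueError("integration_time_us must be positive")
    if not events:
        return []
    t0 = min(e.t_us for e in events)
    t_end = max(e.t_us for e in events)
    n_windows = (t_end - t0) // integration_time_us + 1
    frames = [[[0] * width for _ in range(height)] for _ in range(n_windows)]
    for e in events:
        k = (e.t_us - t0) // integration_time_us  # index of the window this event falls in
        frames[k][e.y][e.x] += e.polarity
    return frames


def lif_neuron(inputs: List[float], leak: float = 0.9,
               threshold: float = 1.0) -> List[int]:
    """Simple LIF neuron (assumed model): decay, integrate, spike, reset to zero."""
    v = 0.0
    spikes = []
    for current in inputs:
        v = v * leak + current   # leaky integration of the input current
        if v >= threshold:
            spikes.append(1)
            v = 0.0              # reset membrane potential after a spike
        else:
            spikes.append(0)
    return spikes


events = [Event(0, 0, 0, +1), Event(500, 1, 0, +1),
          Event(1200, 1, 0, -1), Event(2500, 2, 0, +1)]
short_windows = integrate_events(events, 1000, width=3, height=1)
long_window = integrate_events(events, 5000, width=3, height=1)
print(short_windows)  # [[[1, 1, 0]], [[0, -1, 0]], [[0, 0, 1]]]
print(long_window)    # [[[1, 0, 1]]]
print(lif_neuron([0.6, 0.6, 0.0, 1.0]))  # [0, 1, 0, 1]
```

The same event stream produces different representations depending on the window length. With a short window, the brief on/off activity at pixel `x=1` remains visible. With a long window, that activity cancels out. This illustrates why the choice of integration time affects which temporal motion patterns can be extracted.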