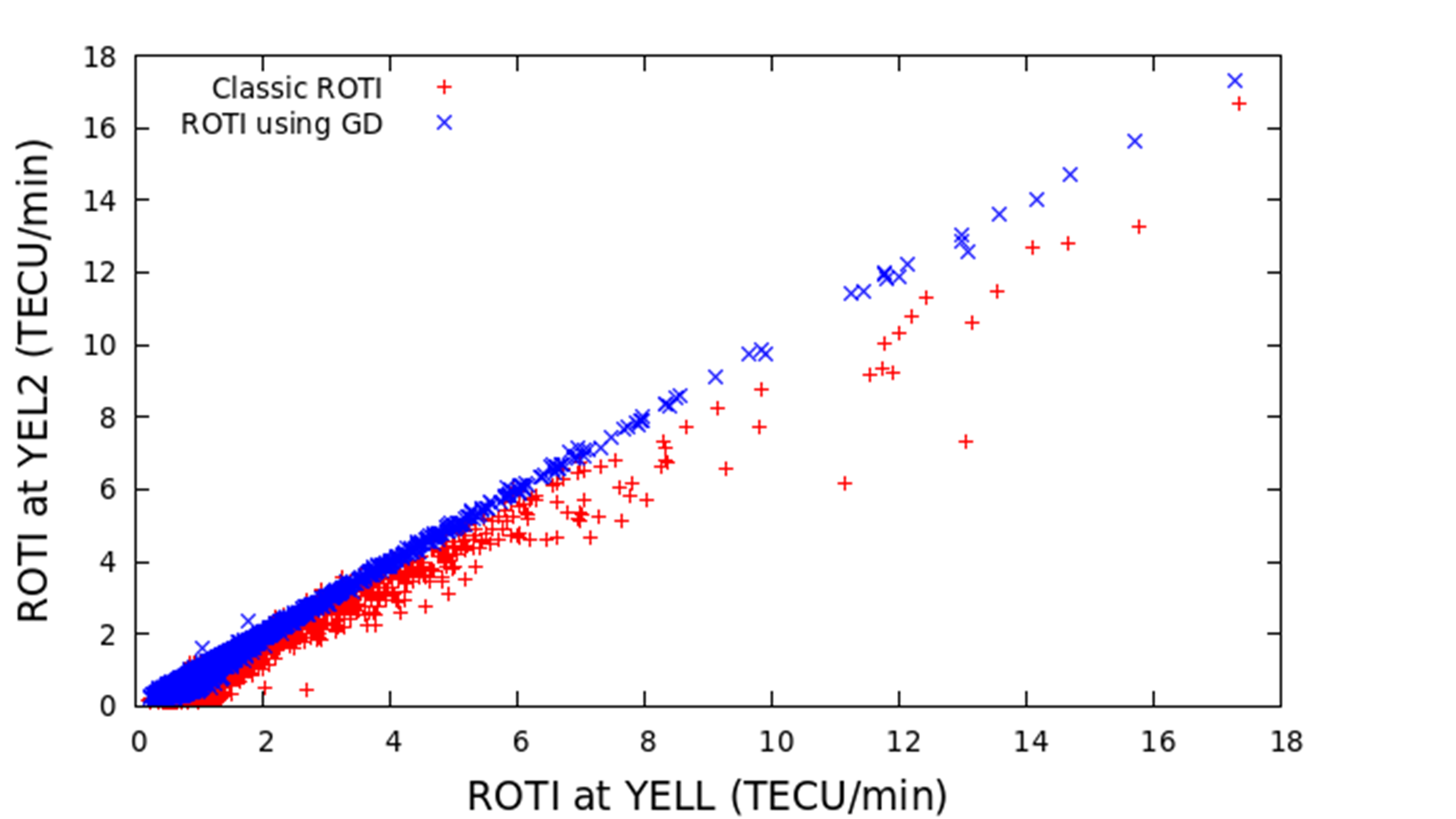

Radio signals crossing the ionosphere at high and low latitudes are regularly affected by ionospheric scintillation, and mid-latitudes are also affected during geomagnetic storms. Scintillation severely degrades precise positioning and Safety of Life (SoL) applications that rely on transionospheric links: it can disrupt services or produce misleading information through undetected cycle slips or increased signal noise.

Scintillation is currently monitored with high-end, special-purpose receivers equipped with stable clocks. Each device is expensive, so few have been deployed. In contrast, a wide network of geodetic ground receivers has been freely available for more than a decade. This network can monitor ionospheric activity through the Rate of TEC Index (ROTI) computed from GNSS measurements, but there is no straightforward relationship between ROTI and actual scintillation. Moreover, ROTI values depend on the receiver tracking design [3].

As an alternative, gAGE/UPC has developed the post-processing Geodetic Detrending (GD) technique, which accurately models GNSS signals from geodetic receivers operating at 1 Hz and can measure scintillation at any frequency [1][2][4]. The proposed idea is to apply GD to obtain genuine scintillation indices worldwide and in real time, using the products of present and future real-time services such as the Galileo High Accuracy Service. This extends the currently localized data from expensive receivers to a global scale at a large cost saving, since only data from existing public networks of permanent stations are required.

The main benefits are:

- Real-time monitoring and warning of scintillation activity for precise positioning and SoL applications.
- Availability of extended data sets for worldwide climatological studies [5] and for testing global and regional models.
- Possible extension to low-cost receivers, further reducing the cost of scintillation monitoring.
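
For readers unfamiliar with ROTI, the sketch below shows how the index is commonly computed from dual-frequency carrier phases: the geometry-free combination gives a relative slant TEC, its time derivative gives the rate of TEC (ROT), and ROTI is the standard deviation of ROT over a short window. This is not the GD technique and is not taken from the cited works. The function names, the use of GPS L1/L2 frequencies, and the 5-minute window are illustrative assumptions, and cycle-slip handling is omitted.

```python
import math

# Assumed signal pair: GPS L1 and L2 carrier frequencies in Hz.
F1 = 1575.42e6
F2 = 1227.60e6
# First-order ionospheric constant: 40.3 m^3/s^2, times 1e16 el/m^2 per TECU.
K = 40.3e16


def stec_from_phase(l1_m, l2_m):
    """Relative slant TEC (TECU) from carrier phases given in meters.

    Uses the geometry-free combination L1 - L2. The result is biased by
    the unknown phase ambiguities, which cancel when differencing in time.
    """
    meters_per_tecu = K * (1.0 / F2**2 - 1.0 / F1**2)
    return [(a - b) / meters_per_tecu for a, b in zip(l1_m, l2_m)]


def rot(stec, dt_s):
    """Rate of TEC in TECU/min between consecutive epochs dt_s seconds apart."""
    dt_min = dt_s / 60.0
    return [(b - a) / dt_min for a, b in zip(stec, stec[1:])]


def roti(stec, dt_s, window_s=300.0):
    """ROTI per non-overlapping window: sqrt(<ROT^2> - <ROT>^2).

    Hypothetical helper; assumes a gap-free, cycle-slip-free arc.
    """
    r = rot(stec, dt_s)
    n = int(round(window_s / dt_s))  # ROT samples per window
    out = []
    for i in range(0, len(r) - n + 1, n):
        w = r[i:i + n]
        mean = sum(w) / n
        mean_sq = sum(x * x for x in w) / n
        # Clamp tiny negative values caused by floating-point rounding.
        out.append(math.sqrt(max(mean_sq - mean * mean, 0.0)))
    return out


if __name__ == "__main__":
    # A smooth linear TEC trend at 1 Hz yields ROTI close to zero.
    smooth = [0.01 * i for i in range(601)]
    print(roti(smooth, dt_s=1.0))
```

Because ROTI is built from the geometry-free phase combination alone, it needs no precise clock or orbit modelling, which is why it can be computed from ordinary geodetic receivers. It also mixes both frequencies into one number, which is one way to see why it does not map directly onto a per-frequency scintillation index.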