Machine learning (ML) components automate tasks that are difficult to program; for example, Deep Neural Networks (DNNs) have enabled various autonomous tasks in the automotive domain [4]. In space, ML is used to drive rovers semi-autonomously [5], but ML-based automation of a broader range of tasks (e.g., satellite collision avoidance) is still at an early stage [6]. In safety-critical systems, the main obstacle to ML-based automation is the lack of assurance cases [7]. Currently, the safety of ML components is estimated through traditional metrics (e.g., accuracy on a test set) rather than through evidence supporting safety arguments (e.g., evidence that critical failures are infrequent). Simulators are typically used to generate inputs cost-effectively for such evaluations [8].

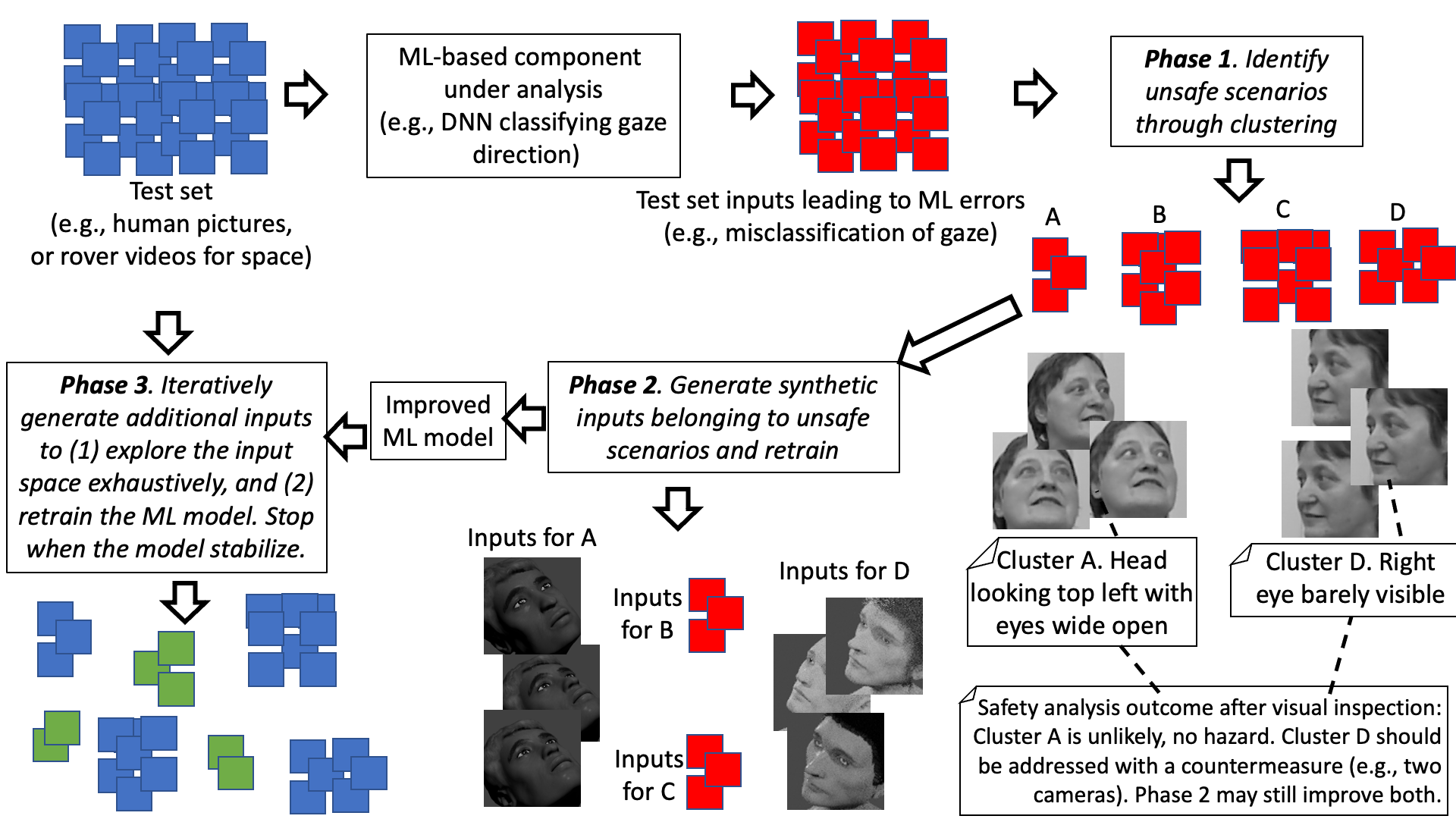

We propose TIA (Test, Improve, Assure), an automated, three-phase approach for the safety assurance of ML components.

In Phase 1, the ML model is tested on a real-world test set, following standard practice; TIA then clusters the inputs that lead to ML failures (e.g., misclassifications). The commonalities among the inputs in each cluster characterize the causes of ML failures (e.g., snow covering road markings in automotive).
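The clustering step of Phase 1 can be illustrated with a minimal Python sketch. It assumes that each test input has already been mapped to a numeric feature vector (the two-dimensional features below are hypothetical), and it uses plain k-means with deterministic farthest-first initialisation as a stand-in clustering algorithm; the description above does not prescribe a specific one. The names `kmeans` and `cluster_failures` are illustrative, not part of TIA.

```python
import math


def kmeans(points, k, iters=100):
    """Plain k-means with deterministic farthest-first initialisation."""
    centroids = [list(points[0])]
    while len(centroids) < k:
        # Seed the next centroid with the point farthest from all chosen ones.
        far = max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        centroids.append(list(far))
    labels = None
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        if new_labels == labels:
            break  # assignments no longer change: converged
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return centroids, labels


def cluster_failures(features, predicted, expected, k):
    """Phase 1 sketch: keep only failure-inducing inputs and group them."""
    failing = [f for f, p, e in zip(features, predicted, expected) if p != e]
    if len(failing) < k:
        raise ValueError("fewer failure-inducing inputs than clusters")
    centroids, labels = kmeans(failing, k)
    clusters = {c: [f for f, lab in zip(failing, labels) if lab == c] for c in range(k)}
    return centroids, clusters


# Example: six misclassified inputs forming two groups, plus one correct input.
features = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (5.0, 5.0), (5.1, 5.0), (5.0, 5.1), (2.5, 2.5)]
expected = ["car"] * 7
predicted = ["truck"] * 6 + ["car"]
centroids, clusters = cluster_failures(features, predicted, expected, k=2)
```

Each resulting cluster is a candidate failure cause; in practice, features would likely be higher-dimensional and the number of clusters would have to be selected rather than fixed.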

In Phase 2, the ML model is retrained using new inputs belonging to each cluster; TIA efficiently derives these inputs using evolutionary algorithms combined with either simulators [9] or generative networks [13].
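To make the Phase 2 search concrete, the following Python sketch uses a simple (μ+λ) evolution strategy to find simulator parameters whose outputs fall close to a given failure cluster. Everything here is an assumption for illustration: `simulate` is a hypothetical, deterministic stand-in for a real simulator or generative network, the fitness (distance between the simulated feature vector and the cluster centroid) is one plausible choice, and `evolve_inputs` is not TIA's actual algorithm.

```python
import math
import random


def simulate(params):
    """Hypothetical simulator stand-in: maps two simulator parameters
    (e.g., lighting and camera angle) to a feature vector of the rendered input."""
    a, b = params
    return (a + 0.5 * b, b - 0.2 * a)


def evolve_inputs(centroid, n_inputs=5, pop_size=20, generations=200, sigma=0.3, seed=0):
    """(mu + lambda) evolution strategy searching for parameters whose
    simulated inputs lie close to the given cluster centroid."""
    rng = random.Random(seed)

    def fitness(params):
        # Lower is better: distance of the simulated input to the cluster.
        return math.dist(simulate(params), centroid)

    population = [(rng.uniform(-10, 10), rng.uniform(-10, 10)) for _ in range(pop_size)]
    for _ in range(generations):
        # Mutation: each parent yields one Gaussian-perturbed offspring.
        offspring = [tuple(x + rng.gauss(0, sigma) for x in p) for p in population]
        # Plus-selection: keep the best pop_size among parents and offspring.
        population = sorted(population + offspring, key=fitness)[:pop_size]
    return population[:n_inputs]


centroid = (5.0, 5.0)  # hypothetical centroid of one failure cluster
new_inputs = evolve_inputs(centroid)
```

The returned parameter sets would then be rendered into new training inputs for retraining; a real setup would also need parameter bounds and a feature extractor comparable to the one used for clustering.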

In Phase 3, TIA generates additional test inputs spread throughout the input space: clustering identifies the areas of the input space that have already been explored, and TIA generates inputs far from them.
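One way to realise "generate inputs far from explored areas" is greedy max-min (farthest-point) selection over a pool of candidates, sketched below in Python. The assumptions are that explored areas are summarised by cluster centroids and that candidates are feature vectors of inputs sampled from a simulator or generative model; `select_far_inputs` is a hypothetical name and farthest-point selection is a stand-in technique, not necessarily TIA's.

```python
import math


def select_far_inputs(explored_centroids, candidates, n):
    """Greedily pick n candidates, each maximising its distance to the
    nearest explored area (max-min / farthest-point selection)."""
    if not explored_centroids:
        raise ValueError("at least one explored area is required")
    reference = [tuple(c) for c in explored_centroids]
    pool = list(candidates)
    chosen = []
    for _ in range(min(n, len(pool))):
        best = max(pool, key=lambda p: min(math.dist(p, r) for r in reference))
        chosen.append(best)
        pool.remove(best)
        # A newly selected input makes its neighbourhood "explored" as well.
        reference.append(best)
    return chosen


explored = [(0, 0)]
candidates = [(1, 0), (10, 0), (0, 9), (9.5, 0.5)]
chosen = select_far_inputs(explored, candidates, n=2)
```

Treating each selected input as explored prevents the selection from piling up in a single distant region: here `(9.5, 0.5)` is skipped because it lies next to the already chosen `(10, 0)`.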

Phase 1 identifies failure causes after traditional ML testing. Phases 2 and 3 improve the ML training process and are iterated until the ML performance is stable; indeed, if the input space has been efficiently sampled for testing and retraining, further sampling should not lead to the discovery of additional unsafe areas. We target DNNs because of their widespread usage, but TIA is model-agnostic.
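The iteration of Phases 2 and 3 can be sketched as a loop with a stability-based stopping criterion. In this Python sketch, "stable" is assumed to mean that the measured performance (e.g., test accuracy) changes by less than a tolerance `tol` for `patience` consecutive iterations; `iterate_until_stable`, `improve`, and `evaluate` are hypothetical placeholders, and the thresholds are illustrative rather than values from TIA.

```python
def iterate_until_stable(improve, evaluate, tol=0.005, patience=2, max_iters=20):
    """Repeat an improvement step (e.g., Phases 2 and 3) until performance
    changes by less than `tol` for `patience` consecutive iterations."""
    history = [evaluate()]
    stable = 0
    for _ in range(max_iters):
        improve()  # e.g., generate new inputs, retrain, extend the test set
        history.append(evaluate())
        if abs(history[-1] - history[-2]) < tol:
            stable += 1
            if stable >= patience:
                break  # performance has plateaued
        else:
            stable = 0  # a significant change resets the counter
    return history


# Example with a fake sequence of accuracies returned after each iteration.
scores = iter([0.80, 0.86, 0.90, 0.902, 0.903, 0.95])
history = iterate_until_stable(improve=lambda: None, evaluate=lambda: next(scores))
```

Requiring several consecutive small changes, rather than one, guards against stopping on a single noisy plateau; `max_iters` bounds the cost if performance never settles.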