State of the Art (SotA) neural networks (NN), such as graph NNs, long short-term memory (LSTM) networks, and transformers, could provide higher-performance and more accurate solutions for onboard data handling, fault detection, isolation and recovery (FDIR), autonomous driving, assisted landing, and star tracking. More generally, several applications in the space domain, in both the onboard and the ground segments, could benefit from parallel computing acceleration. These algorithms are usually accelerated either by ad-hoc architectures, at the cost of modularity and reconfigurability, or by programmable devices such as GPUs, at the cost of power consumption. Existing solutions based on the Myriad-2 VPU accelerate convolutional NNs in space environments. However, these architectures do not support all advanced NN models and, being software-based, they cannot reach the energy efficiency of hardware-based accelerators.

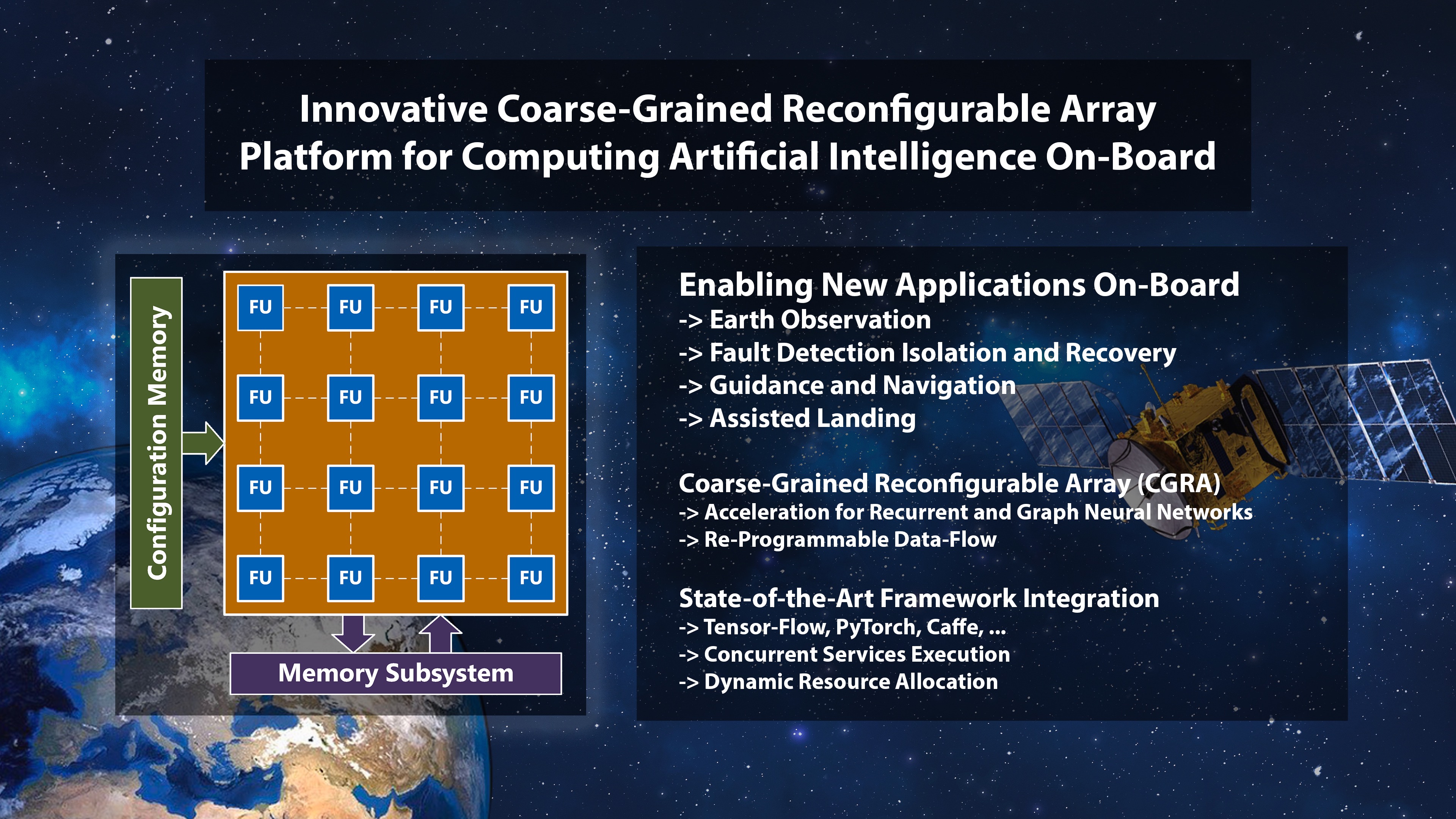

This research proposal aims to study an innovative, reprogrammable, low-power hardware architecture based on a Coarse-Grained Reconfigurable Array (CGRA). In FPGAs, fine-grained programmability is excessive for the simple operations involved in data-path-oriented algorithms; a coarser reconfigurable platform instead allows higher-performance functional units (FUs) to be synthesised directly on silicon. The reprogrammability of a CGRA resides in the data path between the FUs, which can be configured according to the application. This shortens the physical path from inputs to outputs and hence closes the energy-flexibility gap.
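To make the contrast with FPGAs concrete, the following Python sketch models a CGRA at a very high level: each FU hardwires a small set of word-level operations, and a configuration only selects the operation and routes operands between FUs. The operation set, the `FUConfig` and `run_cgra` names, and the mapping of a two-input neuron with ReLU are assumptions made for illustration; they do not describe the proposed architecture.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# Word-level operations assumed (hypothetically) to be hardwired in every FU.
OPS: Dict[str, Callable[[int, int], int]] = {
    "add": lambda a, b: a + b,
    "mul": lambda a, b: a * b,
    "max": lambda a, b: max(a, b),
}


@dataclass
class FUConfig:
    """Per-FU configuration: which hardwired op to use and where its operands come from."""
    op: str
    src_a: str  # name of an array input or of another FU
    src_b: str


def run_cgra(config: Dict[str, FUConfig], inputs: Dict[str, int]) -> Dict[str, int]:
    """Evaluate a configured array.

    FUs are evaluated in the insertion order of `config`, which must follow the
    dataflow (each FU listed after the FUs it reads from). Reconfiguring the array
    only changes `op` and the operand routing; the arithmetic stays fixed in the FUs.
    """
    values = dict(inputs)
    for name, cfg in config.items():
        if cfg.op not in OPS:
            raise ValueError(f"{name}: unsupported op {cfg.op!r}")
        values[name] = OPS[cfg.op](values[cfg.src_a], values[cfg.src_b])
    return values


# Example mapping: a two-input neuron y = relu(a0*w0 + a1*w1) on four FUs.
neuron = {
    "fu0": FUConfig("mul", "a0", "w0"),
    "fu1": FUConfig("mul", "a1", "w1"),
    "fu2": FUConfig("add", "fu0", "fu1"),
    "fu3": FUConfig("max", "fu2", "zero"),  # ReLU expressed as max(x, 0)
}
result = run_cgra(neuron, {"a0": 3, "w0": 2, "a1": -4, "w1": 5, "zero": 0})
print(result["fu3"])  # 0, since 3*2 + (-4)*5 = -14
```

Running a different kernel on the same sketch only requires a new `config` dictionary, which mirrors the idea that the CGRA is reprogrammed through its interconnect rather than at the bit level.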

This study will also investigate a suitable toolchain to ease the implementation of NN applications, keeping the development workflow compatible with SotA frameworks such as TensorFlow, Caffe, and PyTorch.
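In such a toolchain, a model trained in one of these frameworks would first be imported (for example through an interchange format) and then lowered into a dataflow graph that a mapper can place onto the FUs. The sketch below illustrates only that lowering step for a dense layer without bias; the `lower_dense` and `evaluate` functions and the node naming scheme are hypothetical and not part of any existing tool.

```python
from typing import Dict, List, Tuple

# One dataflow node: (node name, operation, first operand, second operand).
Node = Tuple[str, str, str, str]


def lower_dense(weights: List[List[float]]) -> Tuple[List[Node], List[str]]:
    """Lower y = W @ x (no bias) into a graph of binary mul/add nodes.

    Each output row becomes one multiplication per input, followed by a chain of
    additions. Weights are referenced as constants 'w{i}_{j}', inputs as 'x{j}'.
    """
    nodes: List[Node] = []
    outputs: List[str] = []
    for i, row in enumerate(weights):
        if not row:
            raise ValueError(f"row {i} is empty")
        products = []
        for j in range(len(row)):
            name = f"m{i}_{j}"
            nodes.append((name, "mul", f"w{i}_{j}", f"x{j}"))
            products.append(name)
        acc = products[0]
        for k, p in enumerate(products[1:], start=1):
            name = f"a{i}_{k}"
            nodes.append((name, "add", acc, p))
            acc = name
        outputs.append(acc)
    return nodes, outputs


def evaluate(nodes: List[Node], outputs: List[str],
             weights: List[List[float]], x: List[float]) -> List[float]:
    """Reference interpreter used to check that the lowered graph matches W @ x."""
    env: Dict[str, float] = {f"x{j}": v for j, v in enumerate(x)}
    env.update({f"w{i}_{j}": w for i, row in enumerate(weights) for j, w in enumerate(row)})
    for name, op, a, b in nodes:
        env[name] = env[a] * env[b] if op == "mul" else env[a] + env[b]
    return [env[o] for o in outputs]


W = [[1, 2, 3], [4, 5, 6]]
graph, outs = lower_dense(W)
print(len(graph), outs)                  # 10 ['a0_2', 'a1_2']
print(evaluate(graph, outs, W, [1, 0, -1]))  # [-2, -2]
```

A linear addition chain is used for simplicity; a real mapper would likely prefer a balanced reduction tree to shorten the critical path across FUs.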

Ultimately, this research aims to keep the architecture compatible with existing software layers while revolutionising how data-path-oriented algorithms are developed and deployed across many application scenarios.