Duration: 12 months

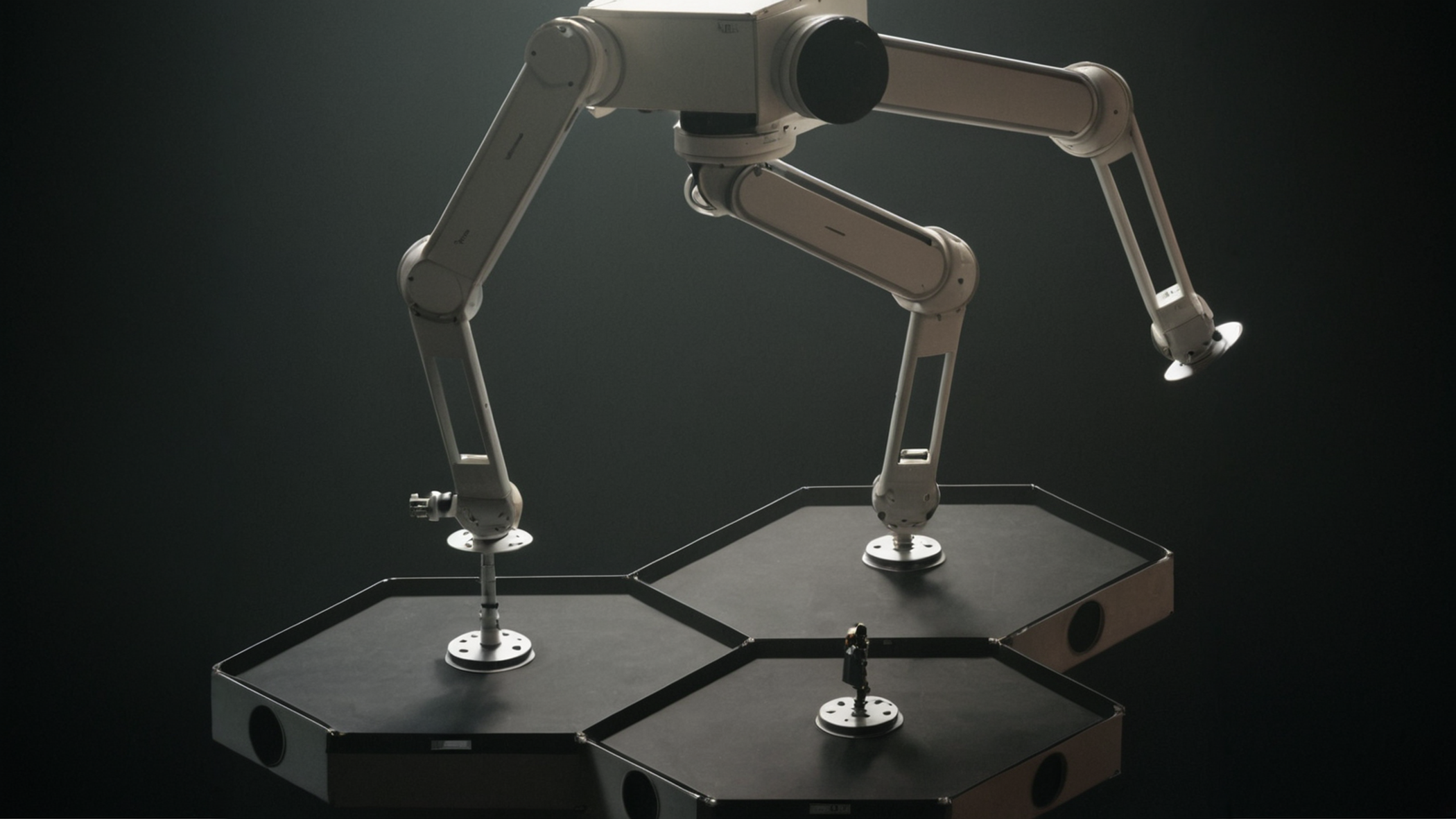

Our project, SKYWALKER (Safe Reinforcement Learning for Multi-Step Robotic Crawling and Navigation on Large Space Structures), explores an advanced control approach that enables robotic arms, such as the multi-arm installation robot in the MIRROR project [12], to autonomously navigate and reposition themselves across large space structures for assembly, maintenance, and disassembly. Building on AI techniques we developed for autonomous planetary rovers [1,2,3], we will use deep reinforcement learning (DRL) to create safe, multi-step navigation policies for a robot that "crawls" across complex structures by moving between intermediate grapple points.

This DRL-based approach lets the robotic arm learn, through trial and error, to plan and execute multi-step movements adaptively, coping with unpredictable conditions, complex structures, and dynamic environments without extensive system modeling. By combining proprioceptive information with visual and depth data from RGB-D cameras and LiDAR, our system can identify safe waypoints, adjust its trajectory to avoid obstacles, and prevent self-collisions. Key innovations include end-to-end policy training for whole-body robot control and integrated obstacle avoidance, both tailored to high-degree-of-freedom robotic systems in space.

Compared with model-based controllers, DRL offers greater adaptability and scalability. DRL policies can generalize across different structural configurations, which reduces the need for pre-programming and increases resilience to unforeseen changes [7,8]. Our project will develop a high-fidelity 3D physics simulation for training navigation and locomotion policies under realistic on-orbit conditions, with the potential to transfer these policies to a physical prototype [1,4]. Ultimately, this adaptable robotic navigation system aims to significantly reduce the need for human intervention in space operations.